Neovulga – Vulgarized Knowledge – Adversarial attacks

Neovulga – Vulgarized Knowledge – Adversarial attacks

At Neovision, scientific monitoring is key to stay state of the art. Every month, the latest advances are presented to the entire team, whether it is new data sets, a new scientific paper… We screen almost all the news. In our ambition to make AI accessible to everyone, we offer you, every month, a simplified analysis of a technical topic presented by our R&D team.

Today, we will introduce adversarial attacks.

What is an adversarial attack?

Adversarial attacks trick neural networks. In the framework of classification algorithms, a set of altered data inserted as input leads the algorithm to misclassify said data. The alterations are almost imperceptible, at least for the human eye.

Note that this article is particularly interested in attacks against computer vision systems.

Different types of adversarial attacks

Targeted or untargeted

In an untargeted attack, we want the proposed output classification to be wrong. It doesn’t matter what the output is, what matters is that the results be wrong. On the contrary, a targeted attack leads to classify a specific thing in output. An example with a marine animal classifier: I may want the model to systematically predict cats.

White box, black box, no box

Another classification of adversarial attacks is to differentiate them according to the amount of information needed before attacking an algorithm.

If the attacker has a lot of knowledge about the model (its architecture, its parameters…) and is able to execute things on it, the attack will be qualified as “white box”. If he has only little information, such as the input and output of the model, the attack will be “black box”. Finally, in a “no box” attack, the model is completely opaque and no information is available about it.

In general, the attacks are tested on “white box” models, then we try to generalize towards “black box” and finally “no box”.

Perceptible or imperceptible

If the human is able to perceive the disturbance made to the image, the attack is perceptible. If not, it is imperceptible.

Physical or digital

If the disturbance is made through physical objects, the attack is qualified as physical. These attacks can be done thanks to stickers, as we will see in the following part. Conversely, a digital attack is perpetrated through a computer.

Specific or universal

The attack is considered specific when it works on a specific architecture. Otherwise, if it can be transferred to other architectures or generalized, it is considered universal.

L’édito de Soufiane

“Deep neural networks are powerful, cutting-edge tools, but remaining misunderstandings may someday hurt us. As sophisticated as they are, they are very vulnerable to small attacks that can drastically alter their results. As we dig deeper into the capabilities of our networks, we need to examine how those networks actually work to build more robust security.

As we move into a future that increasingly incorporates AI and deep learning algorithms into our daily lives, we must be careful to remember that these models can be fooled very easily. Although these networks are, to some extent, biologically inspired and exhibit near (or super) human capabilities in a wide variety of tasks, conflicting examples teach us that the way they operate is nothing like that of real natural creatures. As we have seen, neural networks can fail quite easily and catastrophically, in ways that are totally foreign to us humans.

In conclusion, these attacks should make us more humble. They show us that, although we have made great scientific progress, there is still a lot of work to be done.”

Examples of attacks

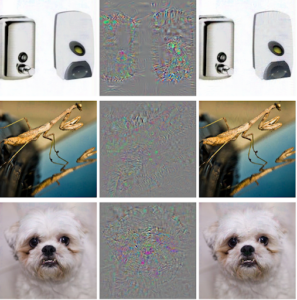

One of the most famous examples is the one aimed at AlexNet, an architecture of convolutional neural networks. You don’t notice it, and that’s normal, but all the images you can see to the right of the first image are classified as ostriches. This is because of the addition of imperceptible noise to the input data.

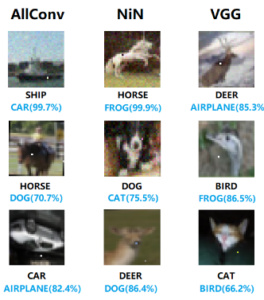

As you can see, it doesn’t take much to fool some neural networks. As little as the change of a single pixel can be enough. This is the principle of the so-called “One Pixel Attack”. This leads to a horse, which is also quickly classified as a frog, as in the second example.

Some attacks take place in the physical world, thanks to stickers. These stickers placed next to the object to be classified force the model to ignore it. The latter is thus classified as something else, namely a toaster in this example. If we were to qualify this attack among the different types mentioned in the previous section, we can say that in addition to being targeted, it is also robust and universal. This type of sticker is directly printable from the original paper. At the time, it was even possible to buy them on Amazon. Beyond stickers, T-shirts were even produced with the design. When a person wore it, it was systematically ignored by the algorithm.

While these examples may seem trivial, these attacks could have much more serious implications. Let’s take the example of the autonomous vehicle. In order to integrate the traffic rules in force, it is necessary to read the signs. However, a simple sticker placed on a speed limit sign can lead to a misclassification of the sign. An autonomous car could then end up driving at 110 km/h instead of 50. The consequences would be disastrous, not to mention that an accident is inevitable..

How to fend them off?

While some defenses have been developed, none are proven enough to become universal. A research paper titled “Adversarial Examples Are Not Easily Detected: Bypassing Ten Detection Methods” published in 2017 reviews ten defense methods. If you thought these were effective, you are actually wrong. The paper demonstrates that it is easy to bypass the defense method once you know which one is applied. Since all the defenses are widely known, it is possible to use a brute force method to defeat the one that is used.

In the end, only one defense remains today: adversarial training. This method consists in adding adversarial examples recursively during training. The model is trained to recognize both the image and its adversarial example. This allows the model to classify these examples and to become immune to certain attacks. However, this technique is not without its flaws. In addition to being quite resource-intensive, it slows down the training phase considerably. It also only works on small images and can cause overfitting problems.

The research field is very active and new methods will quickly replace the old ones. The efforts will be reinforced by the massive awareness of the existing security flaws in the algorithms.